The problem is (as stated in the warning) that your project has two Scala libraries on the class path. One is explicitly configured as part of the project; this is version 2.10.2, which ships with the Scala IDE plugins. The other copy is version 2.10.4 and is included in the Spark jar. Scala IDE requires every project to use the same library version.

One way to fix the problem is to install a different version of Scala IDE that ships with 2.10.4. The same rule applies to newer Spark releases: make sure that the 'Scala Compiler' setting in 'Properties for sparkScalaEclipseIDE' is set to the same version of Scala that Spark 2.2.0 uses (this is Scala 2.11, as opposed to Scala 2.…).

A better solution is to clean up the class path by replacing the Spark jar you are using. The one you have is an assembly jar, which means it includes every dependency used in the build that produced it. If you are using sbt or Maven, you can remove the assembly jar and simply add Spark 1.3.0 and Hadoop 2.4.0 as dependencies of your project. Every other dependency will be pulled in during your build. If you're not using sbt or Maven yet, consider giving sbt a try: it is easy to set up a build.sbt file with a couple of library dependencies, and sbt has some support for specifying which library version to use.
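The dependency-based setup described above might look roughly like the following build.sbt. This is a minimal sketch, not a definitive build: the project name and version are placeholders, and the artifact choices (`spark-core` and `hadoop-client`) are an assumption about which modules the project needs.

```scala
// build.sbt: a minimal sketch; name and version are placeholders
name := "FakeProjectName"

version := "0.1.0"

// Use one Scala version everywhere; the Spark 1.3.0 artifacts
// used here are published for the Scala 2.10 series
scalaVersion := "2.10.4"

libraryDependencies ++= Seq(
  // %% appends the Scala binary version, resolving to spark-core_2.10
  "org.apache.spark" %% "spark-core" % "1.3.0",
  // Assumption: the Hadoop client is the only Hadoop module needed
  "org.apache.hadoop" % "hadoop-client" % "2.4.0"
)
```

With a build like this, the assembly jar no longer needs to sit on the Eclipse build path, so only one Scala library should remain on the class path.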

If you already have Java 8 and Python 3 installed, you can skip the first two steps.

# How to install Spark libraries in Scala IDE on Windows 10

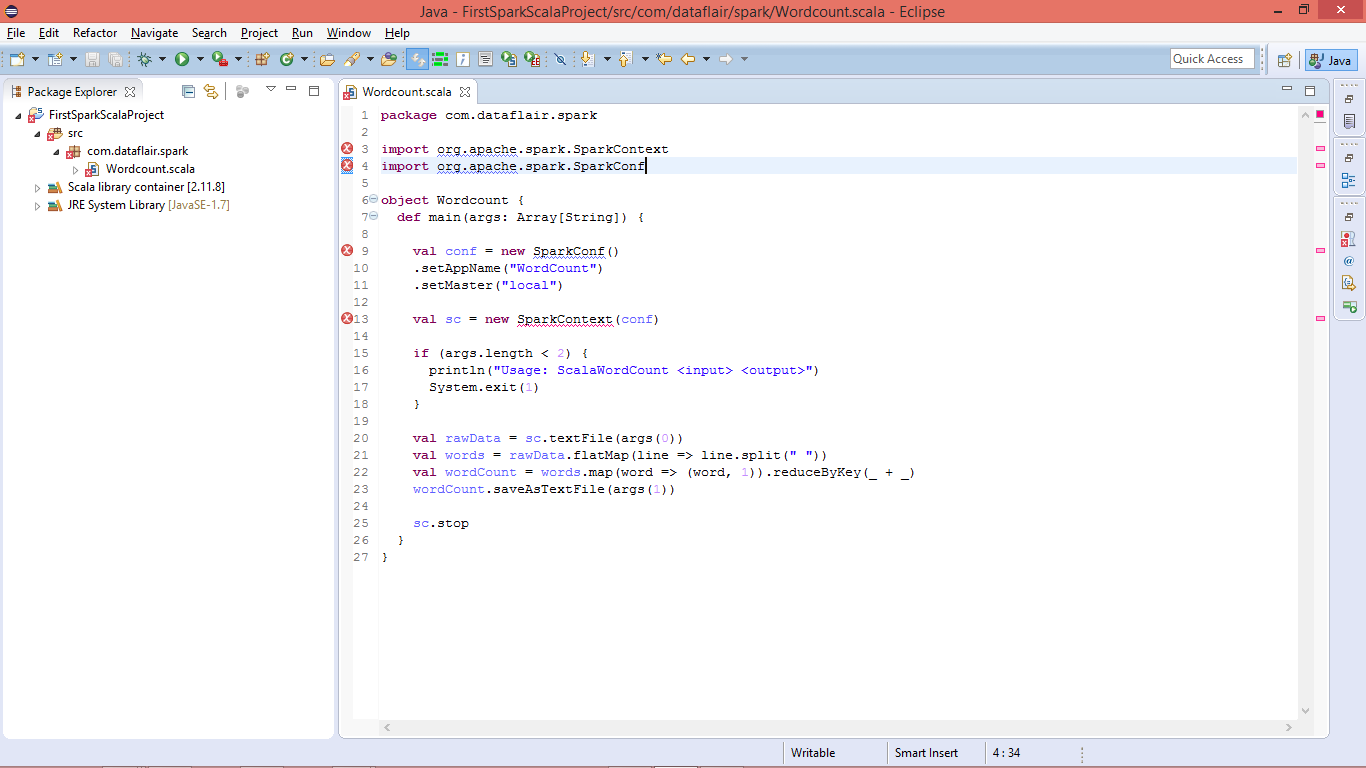

Installing Apache Spark on Windows 10 may seem complicated to novice users, but this simple tutorial will have you up and running. IntelliJ IDEA has also become a widely used IDE for Scala, yet many newcomers have trouble configuring Scala in it and using it with Spark, Spark SQL and GraphX.

My code uses new SparkConf().setMaster("local").setAppName("FakeProjectName"). Then I add spark-assembly-1.3.0-hadoop2.4.0.jar to the build path in Eclipse, and this warning appears in the Eclipse console:

"More than one scala library found in the build path. This is not an optimal configuration, try to limit to one Scala library in the build path. FakeProjectName Unknown Scala Classpath Problem"

Then I remove Scala Library from the build path, and it still works. Except now this warning appears in the Eclipse console:

"The version of scala library found in the build path is different from the one provided by scala IDE: 2.10.4."

Is this a non-issue? Either way, how do I fix it?

This is often a non-issue, especially when the version difference is small, but there are no guarantees.
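To see which Scala library a project actually picks up at run time, a small check like the one below can help. It is only a sketch: the object name `ScalaVersionCheck` and its helper methods are hypothetical, and comparing the first two version components reflects the general expectation that releases within one series (such as 2.10.2 and 2.10.4) are binary compatible, which is not a guarantee for every library.

```scala
import scala.util.Properties

// Hypothetical helper for diagnosing Scala library mismatches
object ScalaVersionCheck {
  // Reduce a full version such as "2.10.4" to its series, "2.10"
  def binaryVersion(full: String): String =
    full.split('.').take(2).mkString(".")

  // True when both versions belong to the same series
  def sameBinarySeries(a: String, b: String): Boolean =
    binaryVersion(a) == binaryVersion(b)

  def main(args: Array[String]): Unit = {
    // Version of the scala-library that was actually loaded
    val running = Properties.versionNumberString
    println(s"Scala library on the class path: $running")

    // Where that library was loaded from (may be unavailable)
    val source = Option(Properties.getClass.getProtectionDomain.getCodeSource)
      .map(_.getLocation.toString)
      .getOrElse("unknown")
    println(s"Loaded from: $source")
  }
}
```

Running it from inside the IDE project shows whether the library comes from the Scala IDE container or from the Spark assembly jar.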
